(This article was originally published in slightly different form in Game Developer magazine, May 2007)

The player guides his in-game character across a footbridge. A monster appears at the other end, so the player decides to turn around and go back. Instead he walks off the side of the bridge and falls to his death. Who is at fault here? Was it the player for not mastering the controls? Was it the level designer for making the bridge too narrow? Was it the animator for making the walk stride too long? Was it the programmer who implemented the controls? Or was it the game designer for specifying the controls, bridge, and animations this way?

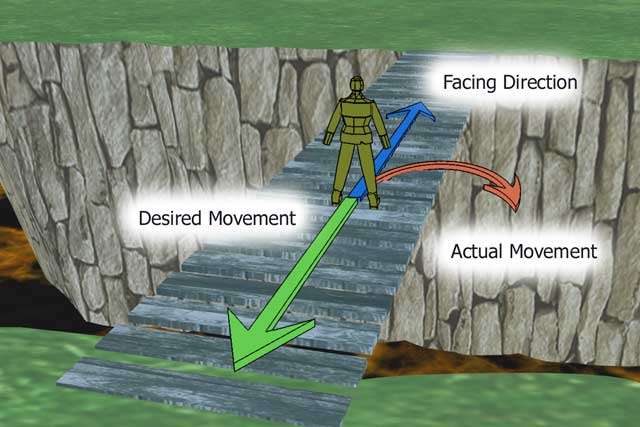

Figure 1 – The player is facing forwards along the bridge and then indicates backwards. But because movement is constrained by the facing direction, the player runs in a circle and falls to his doom.

Well, first of all it’s not the player. They did not buy this game to enjoy mastering the tricky art of turning around on a footbridge. Secondly, assigning individual blame is not helpful. Everyone listed had a role leading up to this slight disappointment for the player, and the end result is due to the interaction of all their efforts. This article examines, mostly from a programmer’s perspective, how to deal with these situations, and discusses the responsibilities of the involved parties.

THIRD PERSON MOTION

The type of game we are discussing is a third person 3D action game with sections involving running or walking. This includes such games as Zelda: Twilight Princess, Grand Theft Auto, Tony Hawk, Scarface, Harry Potter, Genji, Tomb Raider, and many other big name games. In these games, you see the character on screen, generally facing away from you, with the camera looking forward at a slight downward angle. Movement is controlled by pushing the controller stick in the direction you want to go. On PC games, similar control is achieved by pushing the WASD keys in the direction you want to go, sometimes with additional control of the camera by the mouse.

Although we are talking about a 3D game, the problem is essentially two dimensional, as we are concerned with the player’s movement across the ground. Since the camera moves up and down with the player, this essentially means we are dealing with motion in the XZ plane, which we can re-term the XY plane here to match the XY coordinates of our control stick. Here directions are represented by unit vectors, which is probably the most common method, but quaternions or Euler angles might also be used.

Now the basic code here is very simple. There are three directions: the direction of the camera’s forward view vector, the direction the player is facing, and the direction the player is indicating (with the controller stick, or simulated with direction keys such as WASD). The programmer has to take these three directions, and convert them into information that is used to move the player across the ground relative to the camera.

The simplest way to do this is to take the stick direction and rotate it by the camera direction, and then use this new direction as a velocity vector for the player, ignoring for now the facing direction of the player. In two dimensions this trivially involves multiplying the y component of the stick direction by the view direction (view.x, view.y), multiplying the x component by the vector perpendicular to this (view.y, -view.x), and adding the two results. See listing 1.

Listing 1 – calculate the desired direction from the view direction and the stick direction.

desired.x = stick.y * view.x + stick.x * view.y

desired.y = stick.y * view.y - stick.x * view.x
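As a runnable illustration of listing 1, here is a minimal Python sketch. The function name `desired_direction` and the tuple representation are assumptions made for this example, not names from any particular engine. It also assumes the view vector has already been flattened onto the ground plane, and normalises it in case flattening left it shorter than unit length.

```python
import math

def desired_direction(stick, view):
    """Rotate a 2D stick vector into world space using the camera's
    ground-plane forward vector (hypothetical helper for illustration)."""
    sx, sy = stick
    vx, vy = view
    length = math.hypot(vx, vy)
    if length == 0.0:
        # Assumption: a camera looking straight down has no usable forward vector
        raise ValueError("view vector has no ground-plane component")
    vx, vy = vx / length, vy / length
    # Stick "up" moves along the view vector (vx, vy);
    # stick "right" moves along the perpendicular (vy, -vx).
    return (sy * vx + sx * vy, sy * vy - sx * vx)
```

With the camera looking along +Y, pushing the stick up yields a desired direction along +Y, and pushing it right yields +X. If the camera instead looks along +X, the same right push yields -Y, which is the camera's right-hand side in that orientation.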

COMPLICATIONS

So this is very simple so far. Where is the problem? Well, the problems occur when the programmer takes this “desired” direction, and applies it to the motion of the player’s character.

What we could do is simply take the desired direction, and set the player’s velocity to this direction. This actually gives the player very accurate control over their character, with the ability to instantly change direction. However, it is not very realistic looking, as the character will instantly snap to any new direction the player indicates.

To fix this lack of realism, the programmer or designer may reason that people always walk in the direction they are facing, so logically if they are moving, they should only move along their facing vector. If the facing vector is not the same as the desired vector, then the facing vector should be rotated at a natural looking rate towards the desired vector.
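To make the scheme concrete, the following Python sketch simulates it for a character asked to turn around. It uses angles in radians rather than unit vectors purely to keep the example short. The function names, the speed of 3 units per second, the turn rate of half a revolution per second and the 60 Hz frame rate are all made-up values for illustration.

```python
import math

def step_facing_constrained(pos, facing, desired, speed, turn_rate, dt):
    """One frame: turn the facing angle toward the desired angle at a
    limited rate, then move along the facing direction only."""
    # Signed shortest angular difference, wrapped into [-pi, pi]
    diff = math.atan2(math.sin(desired - facing), math.cos(desired - facing))
    max_turn = turn_rate * dt
    facing += max(-max_turn, min(max_turn, diff))
    x, y = pos
    return (x + math.cos(facing) * speed * dt,
            y + math.sin(facing) * speed * dt), facing

def simulate_turnaround(speed=3.0, turn_rate=math.pi, dt=1.0 / 60.0, frames=120):
    """Start facing +X and ask to walk almost directly backwards."""
    pos, facing = (0.0, 0.0), 0.0
    desired = math.pi - 0.01  # tiny offset so the turn direction is well defined
    for _ in range(frames):
        pos, facing = step_facing_constrained(pos, facing, desired, speed, turn_rate, dt)
    return pos, facing
```

Running `simulate_turnaround()` leaves the character heading back the way it came, but displaced sideways by roughly twice the turning radius `speed / turn_rate`. Nothing in the player's input asked for that sideways movement.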

This all sounds very reasonable, and in fact a large number of games implement exactly this scheme. This leads us to the small problem I mentioned at the start of the article. The problem is that the player’s character walks in a circle. There are three reasons why this is a problem.

Firstly there is a disconnect between the player’s intentions and what actually happens. The player has indicated a desired direction. The player is not always indicating a direction in which they want their character to face; frequently they want to walk towards a specific point in the world. Instead, their character will turn, walking forwards along the facing direction until the facing direction is parallel with the desired direction. The character walks in a circle, and will end up perhaps six virtual feet to one side of the desired path. The player now has to correct their direction again to point towards where they were indicating in the first place. Even worse, this inadvertent movement to the side of the path may put them in danger, perhaps dropping them off the side of a bridge (see figure 1, above).

Secondly, it introduces ambiguity. If the player’s character is facing in one direction and the player moves the stick to indicate 180 degrees in the other direction, then due to the various imprecisions, the character might do their six foot circle to the left or the right, with no feedback as to exactly why this direction was chosen. You can demonstrate this in many games by simply attempting to walk back and forth between two specific points, or along a line. Notice the lack of control, and the random nature of the turns.
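One common way to pick the turn direction with unit vectors is the sign of the 2D cross product of the facing and desired vectors. The sketch below assumes that approach (a given game may do it differently) and uses a hypothetical helper name. It shows why a near-180-degree reversal is so sensitive to stick noise: the cross product is close to zero, so a tiny error flips its sign.

```python
def turn_direction(facing, desired):
    """Return +1 for a left (counterclockwise) turn, -1 for a right turn,
    and 0 when the vectors are exactly aligned or exactly opposed."""
    cross = facing[0] * desired[1] - facing[1] * desired[0]
    return (cross > 0) - (cross < 0)

facing = (0.0, 1.0)                          # character faces +Y
hair_right = turn_direction(facing, (0.01, -1.0))   # stick a hair right of straight back
hair_left = turn_direction(facing, (-0.01, -1.0))   # stick a hair left of straight back
straight_back = turn_direction(facing, (0.0, -1.0)) # exactly opposed
```

Here `hair_right` is -1 and `hair_left` is +1: a stick offset far smaller than a thumb can control decides which way the circle goes. The exactly opposed case returns 0, and the code must break that tie somehow, which the player also cannot predict.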

Thirdly, despite the underlying motivation for implementing it this way, it is actually NOT realistic. Try an experiment: get up and stand ten feet away from your chair, with your back to the chair. Then walk back to the chair. Try it now. Did you walk in a six foot circle and then correct your heading? No, you simply turned around, initially either by moving one foot backwards and turning it outwards 90 degrees and moving the other foot over it, or you moved one foot over the other about 45 degrees to the side then moved the other one to face backwards.

Try some more experiments to contrast what happens in real life with what happens in various video games. Walk back and forth along a line. Walk between two points. Run to a point and back. Humans do not walk in circles at constant velocity unless they are following a path. When a human makes a decision to change direction, they do this abruptly, leaning and pushing with a leg to very rapidly pivot their direction of travel. When the player quickly pushes the stick in a new direction, they want their character to move in that direction, just like in real life. This is one of the times in a video game where more physical realism is a good thing.
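As a rough sketch of what moving in the indicated direction could look like in code, the following Python function decouples velocity from facing: the character moves along the desired heading immediately, while the facing angle catches up at its own rate. This is only one possible approach and an assumption of this example, not a scheme the article prescribes. The function name and parameters are hypothetical, and angles are in radians for brevity.

```python
import math

def step_decoupled(pos, facing, desired, speed, turn_rate, dt):
    """One frame: move along the desired heading at once, and let the
    facing angle turn toward it separately at a limited rate."""
    # Signed shortest angular difference, wrapped into [-pi, pi]
    diff = math.atan2(math.sin(desired - facing), math.cos(desired - facing))
    max_turn = turn_rate * dt
    facing += max(-max_turn, min(max_turn, diff))
    x, y = pos
    # Velocity follows the stick, not the facing direction
    return (x + math.cos(desired) * speed * dt,
            y + math.sin(desired) * speed * dt), facing
```

Asked to reverse direction, a character stepped this way stays on its original line rather than circling off it. The trade-off is that the feet may slide while the body turns unless a dedicated turn animation covers the pivot.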

CIRCULAR THINKING

Why does this problem arise? The answer could be that the game developers put the game together rather quickly and did not have time to improve the controls. That excuse might be valid for a little casual game developed over a couple of months, but what of much more expensive mainstream games that cost millions of dollars to make and are in development well over a year? How did they end up with this inaccurate, ambiguous and unrealistic player control?

The answer is complex, and will vary from game to game. But the bottom line is that player control is often a shared responsibility and the problem arises through a lack of clear communication regarding what is actually wanted from each person. The game design document probably did not specifically address this issue. There was probably a diagram showing that the player would control their direction of motion in a camera relative manner with the left analog stick, or the WASD keys. But there is no detail beyond that.

Then the programmer and the animator come into the picture. The animator will supply idle, walk and run animations. The programmer implements code that matches the animation to the movement of the character. The animator is insistent that there is to be no sliding, that the character’s feet stay firmly planted on the ground during the walk cycle. Since turning on the spot in the walk animation results in sliding, the animator and programmer decide the way to solve this is to have the character always move along the forward facing vector, thus keeping the footsteps synced with the movement.

Perhaps instead the programmers come up with a very powerful scheme whereby the animators can fine tune the movement and rotation for each animation, and the designers can implement movement by playing the animations. The designers get various turn animations, including turning on the spot. But despite the power of this system it requires additional programming to actually implement swift turns for every situation, and the player is often left with ambiguous controls.

LETTING IT SLIDE

Why don’t the designers and producers, and the testers, and even the players, notice these problems? Why are they not addressed?

Different people involved have different goals. The programmer wants to implement the specification given to him and make it bug free and efficient. The animator wants her animations to look good. The game designer is concerned with a large number of issues. The testers have their hands full looking for bugs. The end users, who have paid for the game, have enough invested in the game to keep playing long enough to get used to the clunky controls. They learn to correct their heading after turning around. They learn to turn around very slowly if within six feet of danger. They get used to their characters running around in little circles and figure eights.

But inaccurate and ambiguous controls suck the life out of a game. The disconnect between intentions and actions prevents the player from becoming fully engaged in the flow of the game. The constant annoyances of unintended actions add up over time and contribute greatly to the tipping point where the player, consciously or unconsciously, decides a game is not worth playing any more. Hence neither is the sequel, nor will they recommend it to their friends.

PROGRAMMER RESPONSIBILITIES

What is the responsibility of the programmer here? I mentioned before that the causes of this problem are often shared. But the programmer is often in a unique position to do something about it. The control programmer has the deepest understanding of what is actually going on at the frame-to-frame level in the code. The programmer understands the interaction between the view direction, the stick direction, the facing direction and the desired direction. The programmer should know exactly how the animation system ties into the movement of the character, in relation to these four directions.

The programmer’s responsibilities here are to communicate this understanding to the other members of the team in a way that allows them to deal with the issues in a timely manner. The producers and the designers have the responsibility of making sure that the programmer does this.

Programmers sometimes work as if their only task is to implement the feature requests of the designers and technical directors. Programmers get a list of features, and they implement those features one at a time, tick them off and go home happy. But games are complex systems. When you implement a feature you are adding to the complexity of the system, and other features will inadvertently arise. When you implement the “walk along the forward direction, turning it towards the desired direction” feature, you are also implementing the “walk in circles” feature and the “make it difficult to walk to a point behind you” feature.

If a programmer is a step removed from the implementation of player control, then the situation is even worse. The programmer has created some system of defining player control with data or scripts, and handed it off to a designer. The designer may implement the “walk in circles” method simply because that is the only option. Here the programmer’s responsibility is to continue to be available to explain and update the system after it has been implemented to the initial specification. The producer needs to allot time for improvements to the system for many months after it is initially implemented.

CONCLUSIONS

I’ve kept this discussion as simple as possible, and as non-technical as possible. In reality the problem is really quite simple, and the solutions are simple. But time after time games are released with this problem.

The walking-in-circles problem is just one of many similar problems that crop up again and again. Players get stuck against lampposts, cameras snap oddly, players jump at the right time but still fall off the cliff, pressing a button a millisecond too early means the attack does not happen, or you can’t turn for a fraction of a second after an attack. I could list player control problems like this forever.

Players are frustrated by these problems. But they keep playing the game, and work around them. To a similar extent so do the game designers. The reason is that they don’t really understand what is going on within the code, and so are unclear as to the causes of the problem. Perhaps they don’t even appreciate that there is a problem, since they cannot see how it might be addressed. Or perhaps they see the problem, but their “solution” is to make the bridge wider.

This is where the role of the programmer is of utmost importance. The programmer needs to communicate the way things work in a clear and concise manner that allows the designers to both appreciate the causes of the problem, and to find a solution. The programmer is also in a unique position to actually detect problems with the control implementation.

Players are very adaptable. They will work around a problem so intuitively that they will not perceive that there actually IS a problem. Instead they perceive a vague quality problem. The controls “don’t feel right” or they are “sloppy”. They can’t say what the problem is. But the programmer, with his unique insights into what is going on under the hood, at the state level, at the vector level, and at the millisecond level, should be able to see these problems, and it is his responsibility to raise them as issues, and to suggest and implement solutions.